Designing a Mimic Therapy Robot

Timeline

Jan. 2025 - May 2025

Tools

Arduino, Fusion 360, Python (Hume API), laser cutting

Problem

While Korean culture embraces mirror therapy (거울치료) as a concept for self-reflection and emotional growth, no physical robots exist to embody this therapeutic mirroring practice.

Solution

Sprout transforms the cultural concept of mirror therapy into a tangible therapeutic tool, using servo-driven physical features and real-time emotion detection to mirror users' facial expressions for emotional rehabilitation.

Approach

Research

cultural experience

literature review

design goals

Design

low-fi prototype

mid-fi prototype

final prototype

Evaluate

Sciencenter (Ithaca, NY)

Research

Cultural Experience

Mirror Therapy (거울치료) is a Korean cultural concept in which you recognize your own behaviors, flaws, or traits only after seeing them reflected in someone else's actions: a social "mirror" that reveals your blind spots.

This psychological phenomenon inspired us to explore how robotic mirroring could extend beyond self-recognition to active emotional rehabilitation.

Related Works/Literature Review

Mirror Therapy in Robotics

Existing Research:

- Traditional mirror therapy successfully treats phantom limb pain through visual feedback (Ramachandran 2016)

- Second-generation robotic/VR mirror therapy shows mixed clinical effectiveness (Darbois et al. 2018)

- FaraPy pioneered AR-based facial paralysis feedback (Barrios Dell'Olio & Sra 2021)

Research Gap:

- Few systems address facial rehabilitation or emotional mirroring.

Embodied Affective Expression

Existing Research:

- Face-tracking gestures sustain attention 3x longer than static displays (Sidner et al. 2005)

- Robotic tail movements communicate emotions through familiar canine language (Singh & Young 2013)

- Tangible expressions outperform screen-based emotions in user engagement.

Research Gap:

- No robots coordinate multiple physical features (eyebrows, mouth, tail, arm) for unified emotional expression

Real-Time Emotion Recognition

Existing Research:

- Current robots (e.g., Ryan) use discrete emotion labels with 500ms+ lag (Abdollahi et al. 2022)

- Empathic robots increase user engagement but rely on scripted responses

Research Gap:

- Modern streaming APIs detect 20+ emotional states in <200ms but aren't utilized in robotic systems.

Social Gaze Behaviors

Existing Research:

- Eye contact mediates trust, turn-taking, and social presence (Admoni & Scassellati 2017)

- Mutual gaze increases perceived attentiveness by 40%

- Physical eye movements are more effective than screen-based eyes

Research Gap:

- No systems combine real-time eye-tracking input with servo-driven eye output for therapeutic applications

Design Goals

Therapeutic Mirror Feedback

- Apply neuroplastic principles from medical mirror therapy (Ramachandran 2016) to emotional rehabilitation through synchronized facial mirroring (Beom et al. 2016)

Real-Time Responsiveness

- Target <200ms latency for emotion detection and expression to maintain conversational flow

Physical Expressivity

- Replace screens with servo-driven features (eyebrows, eyelids, mouth) for tangible emotional presence

Approachable Design

- Rounded forms and neutral colors reduce user anxiety (Barenbrock et al. 2024)

- Familiar gestures (tail wagging, arm waving) signal friendliness (Singh & Young 2013; Sidner et al. 2005)

- Coordinated movements create a cohesive, lifelike companion

Design

Sketches

Facial Expression Tracking and Mimicking

- Moving Eyebrows: Servo-controlled magnets move the inner eyebrows vertically to express emotions while outer portions remain fixed.

- Mouth Expression: Servo motors drive magnets via fishing line to create smiling, neutral, or frowning expressions by adjusting the mouth's outer edges.

- Squinting Eyes: Bottom eyelids rise using vertical servos and magnets to create natural squinting when smiling.

- Blinking Eyes: Top eyelids descend behind eyes via servo-magnet mechanisms to produce realistic blinking.

Motion Tracking and Eye Movement

- Moving Eyes: Proximity sensors detect user position while horizontal servos move pupils along a curved track to maintain eye contact.

Low-Fi

Physical Mechanism Testing

- Explored forms with cardboard prototypes and found that the servos were larger than expected, so the robot shell had to be scaled up.

- Tested servo-magnet interactions using plastic scraps, magnets, and cardboard before the final build.

- Laser-cut custom gear attachments to replace standard servo arms

Emotion Recognition Testing

- Used the Hume API for emotion recognition and pyserial to send the detected emotion to the Arduino.

- Focused on identifying most frequently expressed emotions for the robot to mimic.
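A minimal sketch of the Python-to-Arduino handoff described above, assuming a simple newline-terminated text protocol. The protocol, the function names, the port path, and the baud rate are illustrative assumptions, not details taken from the project. The sender accepts any object with a `write(bytes)` method, so it can run against an in-memory buffer when no board is attached.

```python
import io


def encode_command(label: str) -> bytes:
    """Turn an emotion label into a newline-terminated ASCII command.

    Assumed protocol: lowercase label followed by "\n". The Arduino side
    would read up to the newline and look up the matching servo pose.
    """
    return label.strip().lower().encode("ascii") + b"\n"


def send_emotion(port, label: str) -> int:
    """Write one command to `port` and return the number of bytes written.

    `port` can be a pyserial `serial.Serial` instance or, for testing
    without hardware, an `io.BytesIO` buffer.
    """
    return port.write(encode_command(label))


if __name__ == "__main__":
    # With a board attached, the port would be opened with pyserial, e.g.:
    #   import serial
    #   port = serial.Serial("/dev/ttyACM0", 9600, timeout=1)
    # (port path and baud rate are placeholders)
    port = io.BytesIO()  # stand-in so the sketch runs without an Arduino
    send_emotion(port, "Joy")
    print(port.getvalue())
```

Keeping the encoding step separate from the write makes it easy to check the protocol on a laptop before any servo is wired up.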

Mid-Fi

Servo Interactions

- Implemented functionality for one eyebrow and tested its response across 5 different emotions.

- The servos moved inconsistently and jerkily, so we added a capacitor to smooth out the movements.

- The camera overheated quickly, so we switched to a black-and-white camera.

- Broke a lot of servos.

Initial Form

- 3D printed the initial robot head shell.

- Discovered during assembly that compartments are needed to properly house the servos.

- The mouth servos did not have enough space to move.

Final Prototype

Hardware

- Used a PCA9685 PWM driver board to control 7 servo motors for coordinated facial movements (2 eyebrows, 2 mouth corners, 1 eye tracking, 1 antenna, 1 arm), with a 470µF capacitor added for power stabilization

- 3D-printed custom servo horns and redesigned the robot shell with dedicated compartments to properly house servos and allow full range of motion for mouth expressions.

- Redesigned the form with smooth, rounded contours and neutral white coloring based on research showing these aesthetic choices reduce user anxiety and increase approachability.
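To illustrate how a servo angle becomes a PCA9685 setting, here is a rough sketch of the arithmetic. The PCA9685 has a 12-bit PWM counter (4096 steps per period), and hobby servos are commonly driven at 50 Hz. The 500 to 2500 µs pulse range, the 180° travel, and the channel assignments below are assumptions for illustration; real servos vary and would need calibration.

```python
PCA9685_STEPS = 4096  # the chip's PWM counter is 12-bit

# Hypothetical channel assignment for the seven servos listed above.
SERVO_CHANNELS = {
    "eyebrow_left": 0,
    "eyebrow_right": 1,
    "mouth_left": 2,
    "mouth_right": 3,
    "eye": 4,
    "antenna": 5,
    "arm": 6,
}


def angle_to_ticks(
    angle_deg: float,
    freq_hz: float = 50.0,
    min_pulse_us: float = 500.0,   # assumed pulse width at 0 degrees
    max_pulse_us: float = 2500.0,  # assumed pulse width at full travel
    max_angle_deg: float = 180.0,
) -> int:
    """Convert a servo angle to the PCA9685 'off' tick count."""
    # Clamp so an out-of-range request cannot push a servo past its stops.
    angle = max(0.0, min(max_angle_deg, angle_deg))
    pulse_us = min_pulse_us + (max_pulse_us - min_pulse_us) * angle / max_angle_deg
    period_us = 1_000_000.0 / freq_hz
    return round(pulse_us / period_us * PCA9685_STEPS)


def pose_to_ticks(pose: dict) -> dict:
    """Map {servo name: angle} to {channel: ticks} for one facial pose."""
    return {SERVO_CHANNELS[name]: angle_to_ticks(a) for name, a in pose.items()}


if __name__ == "__main__":
    # Example pose with made-up angles.
    print(pose_to_ticks({"eyebrow_left": 120, "eyebrow_right": 60}))
```

Clamping the angle in software is a cheap guard against driving a servo into a mechanical stop.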

Software

- Used Hume AI for real-time emotion recognition with ~200ms latency, classifying detected emotions into 5 core states and sending commands via pyserial to Arduino for synchronized servo movements.

See the code
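As a rough illustration of the classification step (not the project's actual code), the sketch below collapses a dictionary of fine-grained emotion scores into one of five core states. The five state names, the grouping of labels, and the 0.3 threshold are all assumptions. The label strings are modeled on Hume-style emotion names and should be checked against the API's real output.

```python
from typing import Mapping

# Hypothetical grouping of fine-grained labels into five core states.
CORE_STATES = {
    "happy": ("Joy", "Amusement", "Excitement"),
    "sad": ("Sadness", "Disappointment"),
    "angry": ("Anger", "Annoyance"),
    "surprised": ("Surprise (positive)", "Surprise (negative)"),
    "neutral": ("Calmness", "Concentration"),
}


def classify(scores: Mapping[str, float], threshold: float = 0.3) -> str:
    """Return the core state whose strongest member label scores highest.

    Falls back to "neutral" when no state reaches `threshold`, so weak or
    noisy detections do not make the face twitch between expressions.
    """
    strongest = {
        state: max(scores.get(label, 0.0) for label in labels)
        for state, labels in CORE_STATES.items()
    }
    best = max(strongest, key=strongest.get)
    return best if strongest[best] >= threshold else "neutral"


if __name__ == "__main__":
    # Made-up scores for demonstration.
    print(classify({"Joy": 0.8, "Sadness": 0.1}))
```

Taking the maximum within each group, rather than the sum, keeps a state with many weak labels from outvoting a single strong one.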

Evaluate

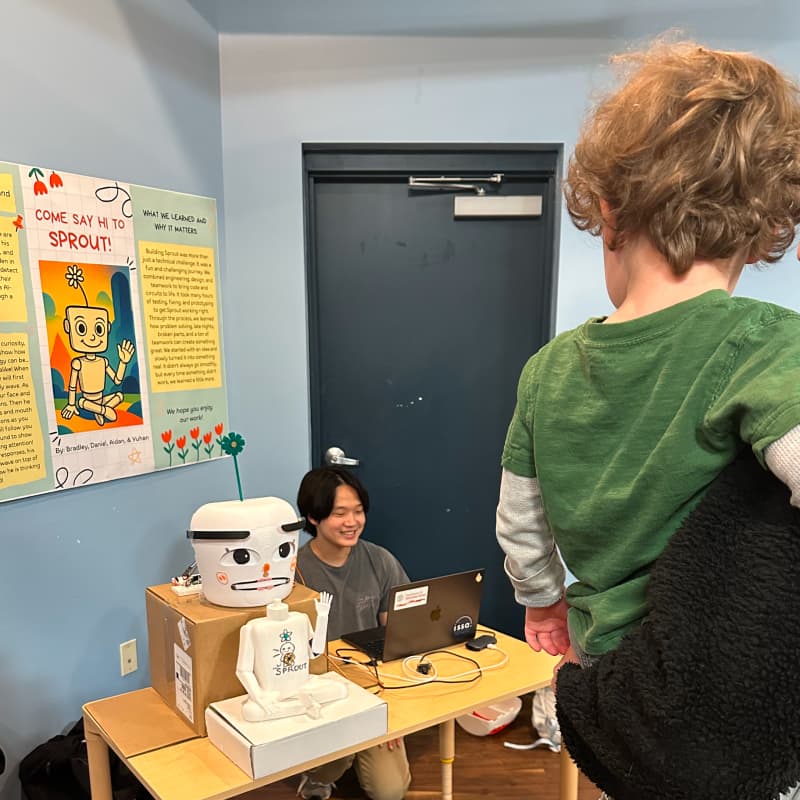

We presented our work at the Sciencenter, a hands-on science museum in Ithaca, NY.

Next Steps

- The camera overheated over the course of the 2-hour event, so we would need a camera that runs cooler or an enclosure with better cooling.

- The current form factor could be optimized for greater portability, enabling more natural handheld interactions that would allow children to engage with Sprout as a companion during therapy sessions.